📔 [Lec1] Neural Networks & Deep Learning

Shallow Neural Network

Week 3 Goals

- Describe hidden units and hidden layers

- Use units with a non-linear activation function, such as tanh

- Implement forward and backward propagation

- Apply random initialization to your neural network

- Increase fluency in Deep Learning notations and Neural Network Representations

- Implement a 2-class classification neural network with a single hidden layer

Week 3 Topics

- Last time we studied Logistic Regression

- This week we build and implement a shallow neural network

- What is a Neural Network?

- How should the weights be initialized?

- How can Vectorization be used to train on many examples at once?

- Why we use Activation Functions, and which ones are available

↓ My Week 2 notes on Logistic Regression are available at the link below

[Deep-Special] [Lec1] Week2. Logistic Regression as a Neural Network (Last Update-20.11.02.Mon)


deepinsight.tistory.com

1. Computing a Neural Network's Output

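- Given a single feature vector x, the output of a neural network with one hidden layer can be computed in four lines of code

- Just as with Logistic Regression, Vectorization lets us train on the training examples without an explicit loop over them

- Vectorization → the whole training set can be processed at once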

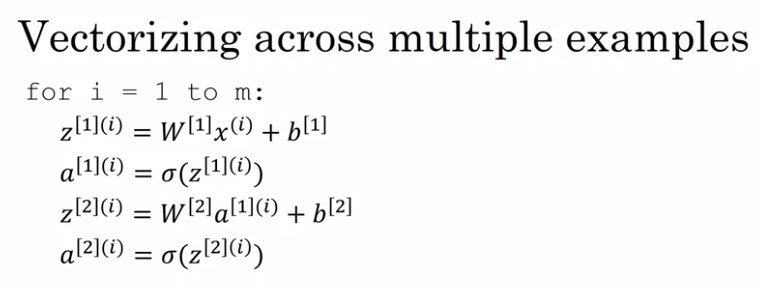
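The four lines are z[1] = W[1]x + b[1], a[1] = g(z[1]), z[2] = W[2]a[1] + b[2], a[2] = σ(z[2]). Below is a minimal NumPy sketch of them for one example, assuming x has shape (n_x, 1), tanh as the hidden activation g and sigmoid at the output; the sizes, seed and weight values are illustrative assumptions, not values from the lecture.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward_single(x, W1, b1, W2, b2):
    """Forward pass for ONE example x of shape (n_x, 1)."""
    z1 = W1 @ x + b1    # (n_h, 1)
    a1 = np.tanh(z1)    # hidden activations, (n_h, 1)
    z2 = W2 @ a1 + b2   # (1, 1)
    a2 = sigmoid(z2)    # output y_hat, (1, 1)
    return a2

# Illustrative sizes and random parameters (assumptions)
rng = np.random.default_rng(0)
n_x, n_h = 3, 4
x = rng.standard_normal((n_x, 1))
W1 = rng.standard_normal((n_h, n_x)) * 0.01
b1 = np.zeros((n_h, 1))
W2 = rng.standard_normal((1, n_h)) * 0.01
b2 = np.zeros((1, 1))

y_hat = forward_single(x, W1, b1, W2, b2)
print(y_hat.shape)  # (1, 1)
```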

2. Vectorizing across multiple examples

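e.g. a[2](i): the activation of layer 2 for the i-th training example (the square brackets index the layer, the round brackets index the example)

- Let's vectorize across m training examples by stacking x(1), ..., x(m) as the columns of a matrix X

The algorithm above shows that, even for m examples, the network can still be implemented with the same four lines of code from Section 1, with matrices (X, Z, A) in place of vectors.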
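A minimal sketch of the vectorized version, assuming each column of X is one example so that Z1 = W1 X + b1 (b1 broadcasts across the m columns) and column i of A2 is a[2](i). The loop at the end only checks that the vectorized result matches computing each example separately; sizes and random values are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, W1, b1, W2, b2):
    """Vectorized forward pass; column i of X is example x(i)."""
    Z1 = W1 @ X + b1    # (n_h, m); b1 is broadcast across columns
    A1 = np.tanh(Z1)    # (n_h, m); column i is a[1](i)
    Z2 = W2 @ A1 + b2   # (1, m)
    A2 = sigmoid(Z2)    # (1, m); column i is a[2](i)
    return A1, A2

rng = np.random.default_rng(1)
n_x, n_h, m = 3, 4, 5
X = rng.standard_normal((n_x, m))
W1 = rng.standard_normal((n_h, n_x))
b1 = rng.standard_normal((n_h, 1))
W2 = rng.standard_normal((1, n_h))
b2 = rng.standard_normal((1, 1))

A1, A2 = forward(X, W1, b1, W2, b2)

# Sanity check: identical to processing the examples one at a time
for i in range(m):
    x_i = X[:, i:i + 1]
    a2_i = sigmoid(W2 @ np.tanh(W1 @ x_i + b1) + b2)
    assert np.allclose(A2[:, i:i + 1], a2_i)

print(A2.shape)  # (1, 5)
```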

3. Activation Functions

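- An activation function that performs well in general will not necessarily give the best result on every problem

- If you're not sure which activation function works best, try them all and evaluate them on a validation (dev) set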
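For comparing candidates, here is a sketch of the activation functions usually discussed at this point (sigmoid, tanh, ReLU, leaky ReLU) together with the derivatives g'(z) that backpropagation needs. The leaky-ReLU slope alpha=0.01 and the choice g'(0) = 0 for ReLU are common conventions assumed here, not requirements.

```python
import numpy as np

# Activation functions g(z) and their derivatives g'(z)
def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_prime(z):
    return 1.0 - np.tanh(z) ** 2

def relu(z):
    return np.maximum(0.0, z)

def relu_prime(z):
    # The derivative at exactly z = 0 is undefined; 0 is used here by convention
    return (z > 0).astype(float)

def leaky_relu(z, alpha=0.01):
    return np.where(z > 0, z, alpha * z)

def leaky_relu_prime(z, alpha=0.01):
    return np.where(z > 0, 1.0, alpha)

z = np.array([-2.0, 0.0, 2.0])
print(sigmoid(z))     # values in (0, 1)
print(np.tanh(z))     # values in (-1, 1), zero-centered
print(relu(z))        # [0. 0. 2.]
print(leaky_relu(z))
```

Sigmoid is mostly kept for the output unit in binary classification, while tanh or ReLU are the usual hidden-layer choices; which one wins on a given problem is exactly what the evaluation above is meant to decide.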

Then... Why do we need non-linear activation functions?

4. Why do you need non-linear activation functions

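- Without a non-linear activation function, stacking several layers still produces nothing more than a linear function of the input

- Knowing *why* non-linear activations are needed makes studying how neural networks learn much more interesting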
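With a linear (identity) activation g(z) = z, the two layers collapse algebraically: a[2] = W[2](W[1]x + b[1]) + b[2] = (W[2]W[1])x + (W[2]b[1] + b[2]) = W'x + b'. The sketch below checks this numerically with random, illustrative weights, then recomputes the output with tanh in between, where no such single W', b' exists in general.

```python
import numpy as np

rng = np.random.default_rng(2)
n_x, n_h, m = 3, 4, 6
X = rng.standard_normal((n_x, m))
W1, b1 = rng.standard_normal((n_h, n_x)), rng.standard_normal((n_h, 1))
W2, b2 = rng.standard_normal((1, n_h)), rng.standard_normal((1, 1))

# Two layers with a linear (identity) activation g(z) = z
two_layer = W2 @ (W1 @ X + b1) + b2

# One linear layer with W' = W2 W1 and b' = W2 b1 + b2
W_prime = W2 @ W1
b_prime = W2 @ b1 + b2
one_layer = W_prime @ X + b_prime

print(np.allclose(two_layer, one_layer))  # True: the hidden layer added nothing

# With a non-linear activation in between, the collapse no longer holds
nonlinear = W2 @ np.tanh(W1 @ X + b1) + b2
print(np.allclose(nonlinear, one_layer))
```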

5. Backpropagation Intuition
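A sketch of backpropagation for this network, assuming a tanh hidden layer, a sigmoid output and the binary cross-entropy cost; these are the standard gradient formulas for that setup (dZ2 = A2 - Y, dZ1 = W2ᵀdZ2 * g'(Z1), and so on). The finite-difference comparison at the end is an extra sanity check added here, not part of this lecture, and the tiny problem size is an arbitrary assumption.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, p):
    A1 = np.tanh(p["W1"] @ X + p["b1"])
    A2 = sigmoid(p["W2"] @ A1 + p["b2"])
    return A1, A2

def cost(A2, Y):
    m = Y.shape[1]
    return -np.sum(Y * np.log(A2) + (1 - Y) * np.log(1 - A2)) / m

def backward(X, Y, p):
    """Gradients of the cross-entropy cost for a tanh -> sigmoid network."""
    m = X.shape[1]
    A1, A2 = forward(X, p)
    dZ2 = A2 - Y                                  # (1, m)
    dW2 = dZ2 @ A1.T / m                          # (1, n_h)
    db2 = np.sum(dZ2, axis=1, keepdims=True) / m  # (1, 1)
    dZ1 = (p["W2"].T @ dZ2) * (1 - A1 ** 2)       # tanh'(Z1) = 1 - A1^2
    dW1 = dZ1 @ X.T / m                           # (n_h, n_x)
    db1 = np.sum(dZ1, axis=1, keepdims=True) / m  # (n_h, 1)
    return {"W1": dW1, "b1": db1, "W2": dW2, "b2": db2}

# Compare analytic gradients with central finite differences
rng = np.random.default_rng(3)
n_x, n_h, m = 2, 3, 5
X = rng.standard_normal((n_x, m))
Y = (rng.random((1, m)) > 0.5).astype(float)
p = {"W1": rng.standard_normal((n_h, n_x)), "b1": rng.standard_normal((n_h, 1)),
     "W2": rng.standard_normal((1, n_h)), "b2": rng.standard_normal((1, 1))}

grads = backward(X, Y, p)
eps = 1e-6
max_err = 0.0
for name in p:
    for idx in np.ndindex(p[name].shape):
        old = p[name][idx]
        p[name][idx] = old + eps
        c_plus = cost(forward(X, p)[1], Y)
        p[name][idx] = old - eps
        c_minus = cost(forward(X, p)[1], Y)
        p[name][idx] = old  # restore the parameter
        numeric = (c_plus - c_minus) / (2 * eps)
        max_err = max(max_err, abs(numeric - grads[name][idx]))

print(max_err < 1e-6)  # True when the analytic gradients match
```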

6. Random Initialization

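- Why? As with activation functions, it is important to understand why the weights are initialized randomly. If every weight starts at zero (or at any identical value), every hidden unit computes the same function and receives the same gradient update, so the units stay identical no matter how long we train. Random initialization breaks this symmetry (the biases b can still start at zero)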
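To see the symmetry problem concretely, the sketch below gives every weight the same constant (0.5, an arbitrary choice), runs plain gradient descent with a tanh hidden layer and a sigmoid output on random toy data (all values here are assumptions), and checks that all hidden units still have identical incoming weights afterwards.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(4)
n_x, n_h, m = 2, 3, 8
X = rng.standard_normal((n_x, m))
Y = (rng.random((1, m)) > 0.5).astype(float)

# Symmetric initialization: every weight has the same value
W1 = np.full((n_h, n_x), 0.5)
b1 = np.zeros((n_h, 1))
W2 = np.full((1, n_h), 0.5)
b2 = np.zeros((1, 1))

lr = 0.1
for _ in range(100):
    # Forward pass
    A1 = np.tanh(W1 @ X + b1)
    A2 = sigmoid(W2 @ A1 + b2)
    # Backward pass
    dZ2 = A2 - Y
    dW2 = dZ2 @ A1.T / m
    db2 = dZ2.sum(axis=1, keepdims=True) / m
    dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)
    dW1 = dZ1 @ X.T / m
    db1 = dZ1.sum(axis=1, keepdims=True) / m
    # Gradient descent update
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

# Every row of W1 (one hidden unit each) still equals the first row
print(np.allclose(W1, W1[0]))  # True: the hidden units never became different
```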

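✅ Why initialize the weights to small values?

- If w is large, z = wx + b will also be large in magnitude

- Then the sigmoid or tanh function will saturate

- So it is common to initialize the weights to small random values (the lecture scales np.random.randn output by 0.01)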
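A sketch of that initialization: small random weights and zero biases. The 0.01 scale follows the lecture's example; using np.random.default_rng instead of np.random.randn, and the helper name initialize_parameters, are choices made for this sketch.

```python
import numpy as np

def initialize_parameters(n_x, n_h, n_y, scale=0.01, seed=None):
    """Small random weights break symmetry; biases can safely start at zero."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.standard_normal((n_h, n_x)) * scale,  # (n_h, n_x)
        "b1": np.zeros((n_h, 1)),
        "W2": rng.standard_normal((n_y, n_h)) * scale,  # (n_y, n_h)
        "b2": np.zeros((n_y, 1)),
    }

params = initialize_parameters(n_x=2, n_h=4, n_y=1, seed=0)
for name, value in params.items():
    print(name, value.shape)
```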

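✅ Why not initialize the weights with large values? (Worth thinking this case through as well.)

- If the weights are initialized to large values, z becomes large in magnitude and the outputs of sigmoid and tanh end up near their limits (0 or 1 for sigmoid, -1 or 1 for tanh)

- In those flat regions the slope is close to 0, so backpropagation updates the weights by only tiny amounts and learning slows down (the optimization algorithm makes slow progress)

- This is called saturation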
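A quick way to see saturation is to evaluate the slopes g'(z) as z grows. Because backpropagation multiplies by tanh'(Z1) when computing dZ1, a tiny slope means a tiny weight update. The z values below are arbitrary sample points; tanh'(z) falls from 1 at z = 0 to roughly 1e-8 at z = 10.

```python
import numpy as np

def tanh_prime(z):
    return 1.0 - np.tanh(z) ** 2

def sigmoid_prime(z):
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)

# Larger |z| (e.g. caused by large weights) -> flatter activation -> smaller gradient
for z in [0.0, 2.0, 5.0, 10.0]:
    print(f"z={z:5.1f}  tanh'={tanh_prime(z):.2e}  sigmoid'={sigmoid_prime(z):.2e}")
```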

Summary

- Last time we covered Logistic Regression and the Cost Function, which can be seen as the foundation of Deep Learning

- This time we implemented a Shallow Neural Network, i.e. a network with a single hidden layer, ourselves

- Implementing it requires understanding 'Vectorization', which lets us compute over many training examples at once, as well as Activation Functions

- The programming assignment is Binary Classification with a Shallow Neural Network

- Remember the overall process: define the network, initialize the weights, compute Forwardprop/Backprop, and update the weights

- Working through the assignment helped me understand the parts that weren't entirely clear during the lectures

- We've raced through to Week 3 quickly, and I'm looking forward to what comes next -20.11.08.Sun.pm5:50-
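The define → initialize → forward/backward → update loop from the summary can be sketched end to end as below. The toy dataset (label 1 when both features share a sign, an XOR-like pattern), n_h=4, the learning rate 1.2 and 2000 iterations are assumptions for illustration; this is not the assignment's dataset or its exact code, and nn_model / predict are hypothetical helper names.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def nn_model(X, Y, n_h=4, lr=1.2, num_iterations=2000, seed=0):
    """Define sizes, initialize, then repeat forward -> cost -> backward -> update."""
    rng = np.random.default_rng(seed)
    n_x, m = X.shape
    n_y = Y.shape[0]
    W1 = rng.standard_normal((n_h, n_x)) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = rng.standard_normal((n_y, n_h)) * 0.01
    b2 = np.zeros((n_y, 1))
    costs = []
    for _ in range(num_iterations):
        # Forward propagation
        A1 = np.tanh(W1 @ X + b1)
        A2 = sigmoid(W2 @ A1 + b2)
        costs.append(-np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2)))
        # Backward propagation
        dZ2 = A2 - Y
        dW2 = dZ2 @ A1.T / m
        db2 = dZ2.sum(axis=1, keepdims=True) / m
        dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)
        dW1 = dZ1 @ X.T / m
        db1 = dZ1.sum(axis=1, keepdims=True) / m
        # Gradient descent update
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), costs

def predict(params, X):
    W1, b1, W2, b2 = params
    A2 = sigmoid(W2 @ np.tanh(W1 @ X + b1) + b2)
    return (A2 > 0.5).astype(int)

# Hypothetical toy data: label is 1 when the two features share a sign
rng = np.random.default_rng(42)
X = rng.standard_normal((2, 400))
Y = ((X[0] * X[1]) > 0).astype(float).reshape(1, -1)

params, costs = nn_model(X, Y)
accuracy = np.mean(predict(params, X) == Y)
print(f"first cost {costs[0]:.3f}, last cost {costs[-1]:.3f}, train accuracy {accuracy:.2%}")
```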

Reference

Deep Learning Specialization (deeplearning.ai)


www.coursera.org
