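Hello, this is Steve-Lee. I should be writing my graduation thesis right now, but the Deep Learning Specialization course is so much fun that I'm hooked on it... haha...

This assignment is optional, but I gave it a try to build a solid foundation. It was a good chance to get plenty of practice with Numpy, which I'll be using a lot from now on, and with Python for deep learning.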

Assignment overview

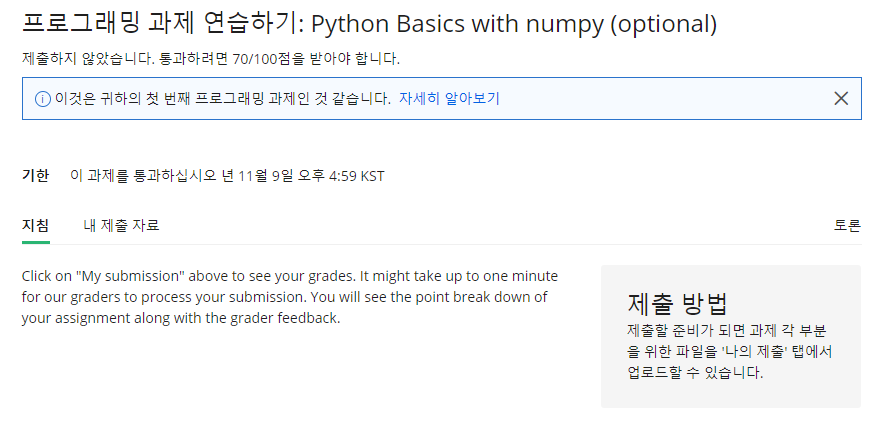

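Programming assignment practice: Python Basics with numpy (optional)

- It is the Week 2 assignment and is optional

- You need a score of at least 70 to pass, and there seems to be a separate submission procedure

- The guidelines are as follows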

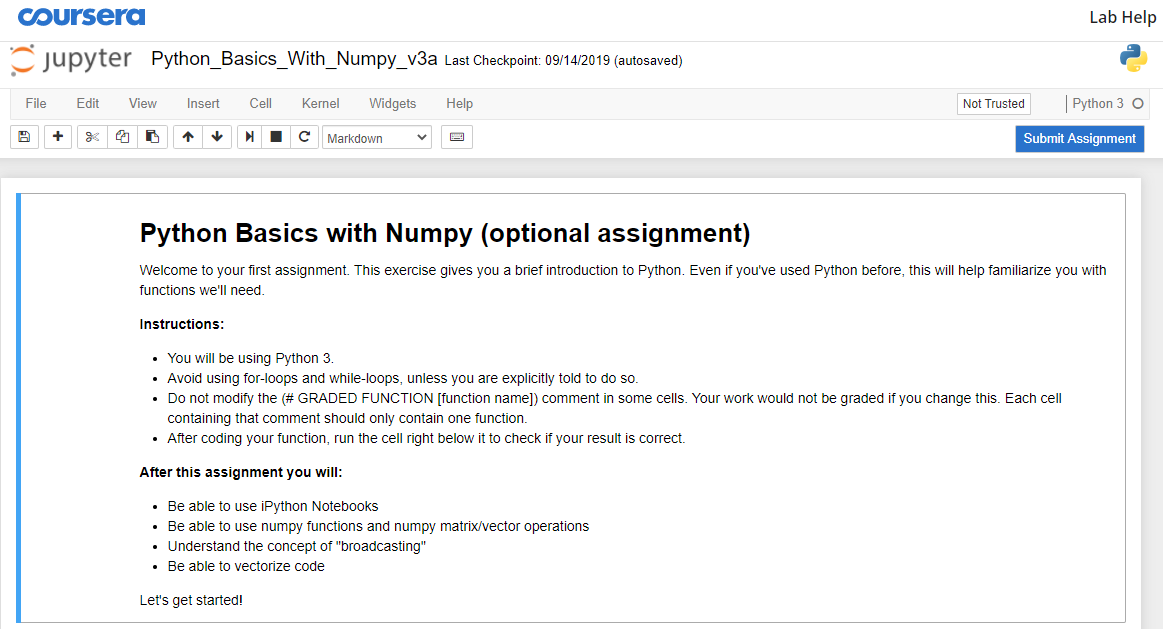

Python Basics with Numpy (optional assignment)

- It is a useful tutorial even for people who already know Python

- Instruction

- Avoid for-loops and while-loops

- After coding your function, run the cell right below it (it will check whether your result is correct)

- After this Assignment

- Be able to use iPython Notebooks

- Be able to use numpy functions and numpy matrix/vector operation

- Understand the concept of "broadcasting"

- Be able to vectorize code

1. Building basic functions with numpy

Assignment1. Sigmoid Function

$\text{sigmoid}(x) = \frac{1}{1 + e^{-x}}$

- Actually, we rarely use the "math" library in deep learning because its functions take single real numbers as inputs, whereas in deep learning we mostly use matrices and vectors. This is why numpy is more useful.

- Again, one reason we use "numpy" instead of "math" in deep learning is that we mostly work with matrices and vectors

- In fact, if $x = (x_1, x_2, \dots, x_n)$ is a row vector, then np.exp(x) applies the exponential function to every element of x. The output is thus $\text{np.exp}(x) = (e^{x_1}, e^{x_2}, \dots, e^{x_n})$

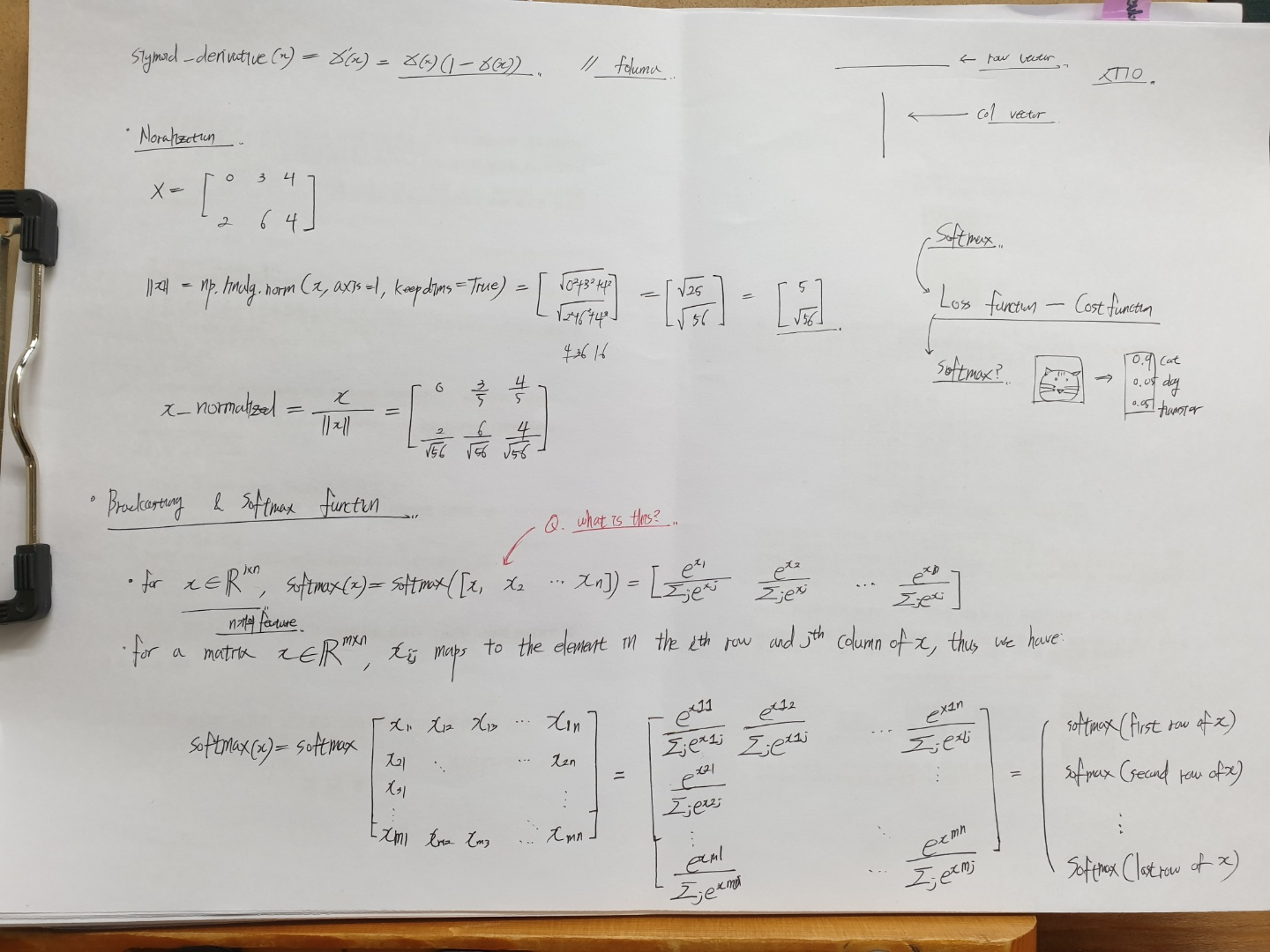
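To make the math vs numpy point concrete, here is a minimal sketch of how the sigmoid could be written. The sample input is made up for illustration, and this is my own version rather than the official solution.

```python
import math
import numpy as np

def sigmoid(x):
    """Element-wise sigmoid; x may be a scalar or a numpy array."""
    return 1.0 / (1.0 + np.exp(-x))

# Made-up sample input, just for illustration
x = np.array([1.0, 2.0, 3.0])
s = sigmoid(x)  # np.exp is applied to every element of x

# math.exp only accepts a single number, so an array input fails
try:
    math.exp(x)
    math_accepts_array = True
except TypeError:
    math_accepts_array = False

print(s)
print(math_accepts_array)
```

Because np.exp works element by element, the same function handles scalars, vectors, and matrices without any loop.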

Assignment1-2. Sigmoid gradient

$\text{sigmoid\_derivative}(x) = \sigma'(x) = \sigma(x)\,(1 - \sigma(x))$

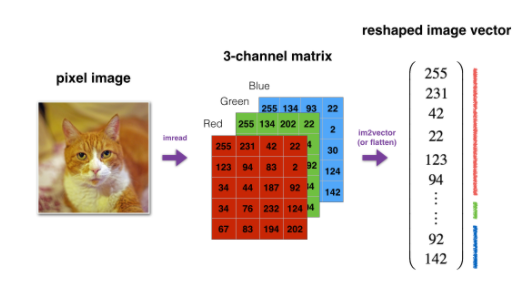
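The gradient formula translates almost directly into numpy. The sketch below assumes the same element-wise sigmoid as before; the input values are arbitrary.

```python
import numpy as np

def sigmoid_derivative(x):
    """Gradient of the sigmoid, computed as s * (1 - s) element-wise."""
    s = 1.0 / (1.0 + np.exp(-x))  # sigma(x)
    return s * (1.0 - s)          # sigma(x) * (1 - sigma(x))

# Arbitrary sample input
ds = sigmoid_derivative(np.array([1.0, 2.0, 3.0]))
print(ds)
```

Computing s once and reusing it avoids evaluating the exponential twice.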

1.3 - Reshaping arrays

Two numpy tools used all the time in deep learning are the shape attribute and the reshape() method.

- X.shape is used to get the shape (dimension) of a matrix/vector X.

- X.reshape(...) is used to reshape X into some other dimension.

- In computer vision, an image stored as an array of shape (length, height, 3) often has to be unrolled into a vector of shape (length * height * 3, 1) before it is fed to an algorithm
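As a rough sketch of that conversion, the helper below unrolls a fake image into a column vector. The name image2vector and the 4 x 5 image size are my own choices for illustration.

```python
import numpy as np

def image2vector(image):
    """Unroll an array of shape (length, height, depth) into (length*height*depth, 1)."""
    length, height, depth = image.shape
    return image.reshape(length * height * depth, 1)

# Fake 4 x 5 RGB "image" filled with consecutive numbers
image = np.arange(4 * 5 * 3, dtype=float).reshape(4, 5, 3)
v = image2vector(image)

print(image.shape)  # shape before unrolling
print(v.shape)      # shape after unrolling
```

Reading the dimensions from image.shape instead of hard-coding them keeps the function usable for any image size.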

1.4 Normalizing rows

- Another common technique we use in Machine Learning and Deep Learning is to normalize our data

- It often leads to better performance because gradient descent converges faster after normalization.

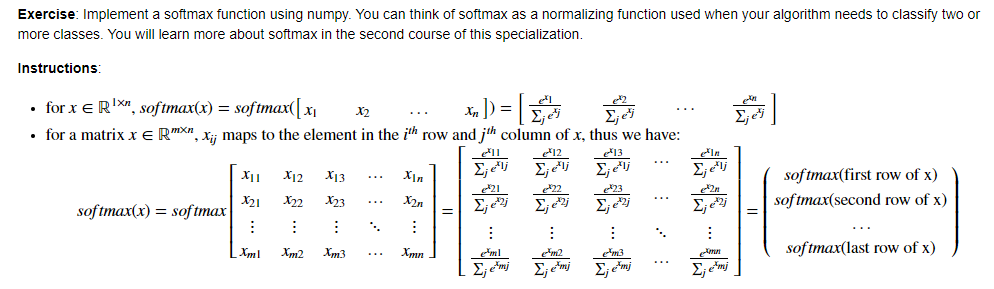
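One possible way to normalize rows is to divide each row by its L2 norm so that every row ends up with unit length. That choice of norm is my assumption here, and the function name and sample matrix are made up.

```python
import numpy as np

def normalize_rows(x):
    """Divide each row of x by its L2 norm (assumes no all-zero rows)."""
    # keepdims=True keeps the norms as a column of shape (n, 1),
    # so the division broadcasts across each row
    row_norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / row_norms

# Made-up sample matrix
x = np.array([[0.0, 3.0, 4.0],
              [2.0, 6.0, 4.0]])
x_normalized = normalize_rows(x)
print(x_normalized)
```

The division works even though x has shape (2, 3) and row_norms has shape (2, 1), which is a small preview of broadcasting.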

1.5 Broadcasting and the softmax function

- A very important concept to understand in numpy is broadcasting

- It is very useful for performing mathematical operations between arrays of different shapes
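A row-wise softmax is a handy place to see broadcasting at work. This is a minimal sketch: treating each row as one example is my assumption, and subtracting the row maximum is an extra numerical-stability step I added that does not change the result.

```python
import numpy as np

def softmax(x):
    """Row-wise softmax of a 2D array of shape (n, m)."""
    # Subtract each row's max for numerical stability: (n, m) - (n, 1) broadcasts
    x_exp = np.exp(x - np.max(x, axis=1, keepdims=True))
    # Row sums have shape (n, 1) and broadcast across the m columns
    x_sum = np.sum(x_exp, axis=1, keepdims=True)
    return x_exp / x_sum

# Made-up sample input
x = np.array([[9.0, 2.0, 5.0, 0.0, 0.0],
              [7.0, 5.0, 0.0, 0.0, 0.0]])
probs = softmax(x)
print(probs.sum(axis=1))  # each row should sum to 1 (up to rounding)
```

Dividing an (n, m) array by an (n, 1) array works because numpy stretches the smaller array along the axis of size 1.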

2.1 Implement the L1 and L2 loss functions

- Implement the numpy vectorized version of the L1 loss.

- You may find the function abs(x) useful

L1 Loss

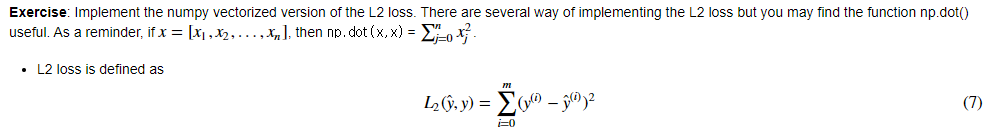
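The formula itself is in the screenshot, so as an assumption I use the usual definition here: the L1 loss is the sum of absolute differences between the labels y and the predictions yhat. The sample values are made up.

```python
import numpy as np

def l1_loss(yhat, y):
    """Sum of absolute differences between labels y and predictions yhat."""
    return np.sum(np.abs(y - yhat))

# Made-up predictions and labels
yhat = np.array([0.9, 0.2, 0.1, 0.4, 0.9])
y = np.array([1.0, 0.0, 0.0, 1.0, 1.0])
loss_l1 = l1_loss(yhat, y)
print(loss_l1)
```

np.abs works element-wise on the whole vector, so no loop over examples is needed.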

L2 Loss

- Exercise: Implement the numpy vectorized version of the L2 loss. There are several ways of implementing the L2 loss, but you may find the function np.dot() useful. As a reminder, for a vector x, np.dot(x, x) is the sum of the squares of its elements.
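One way to use np.dot for this is sketched below. I am assuming the usual definition of the L2 loss as the sum of squared differences between y and yhat, and the sample values are made up.

```python
import numpy as np

def l2_loss(yhat, y):
    """Sum of squared differences, computed as a dot product."""
    diff = y - yhat
    return np.dot(diff, diff)  # same value as np.sum(diff ** 2)

# Made-up predictions and labels
yhat = np.array([0.9, 0.2, 0.1, 0.4, 0.9])
y = np.array([1.0, 0.0, 0.0, 1.0, 1.0])
loss_l2 = l2_loss(yhat, y)
print(loss_l2)
```

np.sum(diff ** 2) would give the same number; the dot product is just a compact way to square and sum in a single call.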

Done!

If you work through the exercises one at a time, I'm sure you can solve them too! You've got this!
