📔 [Lec1] Neural Networks & Deep Learning

This post covers Week 2 of 'Neural Networks & Deep Learning', the first course in the Deep Learning Specialization.

Week 2 objectives

- Forward propagation and backpropagation

- Logistic regression

- Cost function

- Gradient descent

Goal

- Build a logistic regression model structured as a shallow neural network

- Build the general architecture of a learning algorithm, including parameter initialization, cost function and gradient calculation, and optimization implementation (gradient descent)

- Implement computationally efficient and highly vectorized versions of models

- Compute derivatives for logistic regression, using a backpropagation mindset

- Use Numpy functions and Numpy matrix/vector operations

- Work with iPython Notebooks

- Implement vectorization across multiple training examples

Logistic Regression

- Why? - Why do we use Logistic Regression?

- What? - What is Logistic Regression?

- How? - How do we compute it?

- Answer

- Why?

- Logistic Regression was devised to solve a supervised learning problem: binary classification.

- What?

- What is the goal of Logistic Regression?

- Given a problem whose labels are 0 or 1 (y ∈ {0, 1}), predict the class. Examples: cat vs. not cat, spam vs. ham, infected vs. not infected.

- Reduce the error between the training data and the model's predictions.

- How?

- Apply the sigmoid function to a linear function so that the output falls between 0 and 1.

- Why?
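The "How?" step above can be sketched in a few lines of plain Python. This is a minimal illustration, not the course's implementation: the names `sigmoid` and `predict_proba`, the weights, the bias, and the 0.5 threshold are all assumptions chosen for the example.

```python
import math

def sigmoid(z):
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, x, b):
    """Estimated probability that y = 1: sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # linear part
    return sigmoid(z)

# Hypothetical weights, bias, and features, chosen only for illustration.
w = [0.5, -0.25]
b = 0.1
x = [2.0, 4.0]

p = predict_proba(w, x, b)     # here z = 1.0 - 1.0 + 0.1 = 0.1
label = 1 if p >= 0.5 else 0   # assumed threshold of 0.5 to get a class
print(p, label)
```

Because the sigmoid output always lies strictly between 0 and 1, it can be read as a probability and thresholded to obtain the 0/1 class.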

Logistic Regression Cost Function

What is the difference between the cost function and the loss function for logistic regression?

✅ You should be able to explain the difference between the Loss Function and the Cost Function
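For reference, the two functions are usually written as follows for logistic regression. This is the standard cross-entropy form; the notation (superscript (i) for the i-th example, m for the number of training examples) is assumed here rather than taken from this post.

```latex
\hat{y}^{(i)} = \sigma\left(w^{T} x^{(i)} + b\right), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}

\mathcal{L}\left(\hat{y}^{(i)}, y^{(i)}\right) = -\left[ y^{(i)} \log \hat{y}^{(i)} + \left(1 - y^{(i)}\right) \log\left(1 - \hat{y}^{(i)}\right) \right]

J(w, b) = \frac{1}{m} \sum_{i=1}^{m} \mathcal{L}\left(\hat{y}^{(i)}, y^{(i)}\right)
```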

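As the formulas above show, the main difference between the Loss Function and the Cost Function is whether the gap between the actual and predicted values is measured for a single data point or over the entire training data. In short:

- The Loss Function is computed on a single training example

- The Cost Function is the average of that loss over the entire training set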
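The distinction can be made concrete with a small sketch. It assumes the usual cross-entropy loss for logistic regression; the function names `loss` and `cost` and the sample predictions are hypothetical.

```python
import math

def loss(y_hat, y):
    """Cross-entropy loss for ONE training example."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

def cost(y_hats, ys):
    """Cost: the average of the per-example losses over the whole set."""
    losses = [loss(y_hat, y) for y_hat, y in zip(y_hats, ys)]
    return sum(losses) / len(losses)

# Hypothetical predictions and labels, for illustration only.
y_hats = [0.9, 0.2, 0.6]
ys = [1, 0, 1]

print(loss(y_hats[0], ys[0]))  # loss for the first example only
print(cost(y_hats, ys))        # one number for the whole training set
```

`loss` takes a single prediction and label, while `cost` reduces the whole training set to one number, which is the quantity that training tries to minimize.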

So the terminology I'm going to use is that the loss function is applied to just a single training example like so. And the cost function is the cost of your parameters. So in training your logistic regression model, we're going to try to find parameters W and B that minimize the overall cost function J written at the bottom.

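Derivatives

✅ Derivatives

- Intuition: think of a derivative as a slope

- When the function is a straight line, the derivative is the same at every point; when it is a curve or any other shape, the derivative can change with position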
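The slope intuition can be checked numerically. The sketch below approximates the derivative with a central difference; the helper `slope` and the two example functions (a straight line 3x and a parabola x²) are assumptions picked for illustration.

```python
def slope(f, x, h=1e-6):
    """Approximate f'(x) with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)

def line(x):
    return 3 * x       # straight line: slope is 3 everywhere

def curve(x):
    return x ** 2      # parabola: slope is 2x, so it depends on x

for x in (1.0, 2.0, 5.0):
    print(x, round(slope(line, x), 4), round(slope(curve, x), 4))
```

For the line, the printed slope stays at about 3 regardless of x; for the parabola it grows with x (about 2, 4, and 10), which matches the second bullet.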


Logistic Regression Gradient Descent

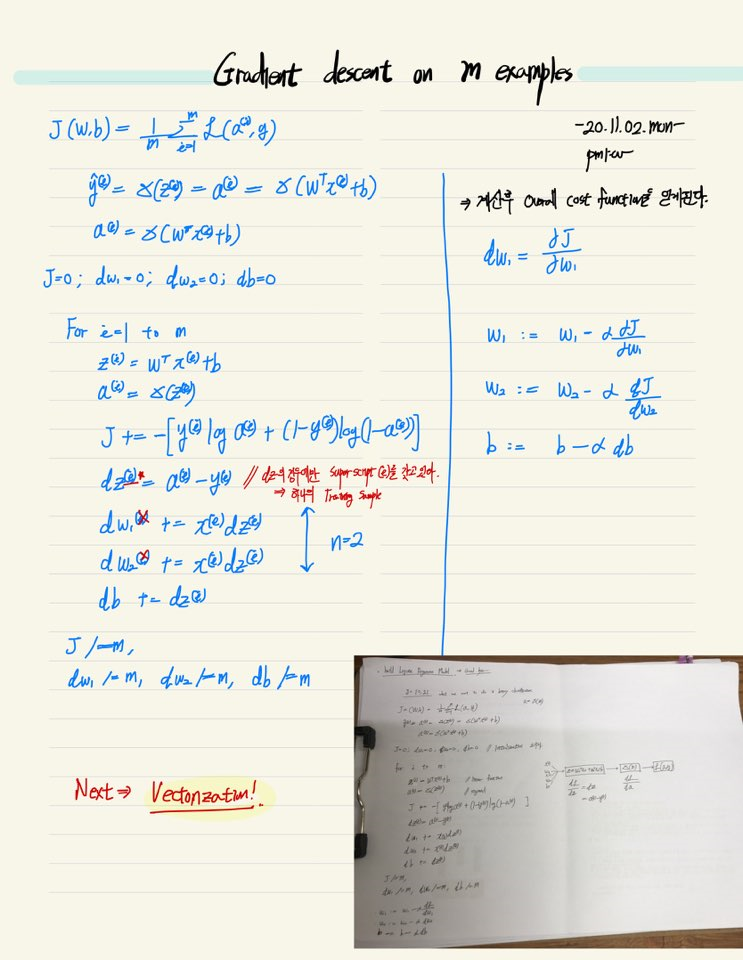
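A gradient descent update for a single training example might look like the sketch below. It assumes the cross-entropy loss with a sigmoid output, for which the derivative with respect to z simplifies to a − y; the function names, the learning rate `alpha`, and the sample data are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gradients(w, b, x, y):
    """Backward pass for one example with cross-entropy loss."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    a = sigmoid(z)                 # forward pass: prediction
    dz = a - y                     # dL/dz
    dw = [xi * dz for xi in x]     # dL/dw_j = x_j * dz
    db = dz                        # dL/db
    return dw, db

def step(w, b, x, y, alpha=0.1):
    """One gradient descent update: move against the gradient."""
    dw, db = gradients(w, b, x, y)
    w = [wi - alpha * dwi for wi, dwi in zip(w, dw)]
    b = b - alpha * db
    return w, b

# Hypothetical example and starting parameters.
x, y = [1.0, 2.0], 1
w, b = [0.0, 0.0], 0.0
for _ in range(3):
    w, b = step(w, b, x, y)
    print(w, b)
```

Each step nudges `w` and `b` in the direction that lowers the loss on this one example; with y = 1 the parameters move so that the prediction rises toward 1.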

Logistic Regression on m examples

More Vectorization Examples

Vectorizing Logistic Regression
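A vectorized version replaces the loop over training examples with NumPy matrix operations. The sketch below is one possible arrangement, not the author's code: it assumes examples are stored as columns of `X` with shape (n_x, m), and the names `train` and `cost`, the learning rate, the iteration count, and the toy dataset are all made up for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, Y, alpha=0.1, iterations=1000):
    """Batch gradient descent for logistic regression, no loop over examples.

    X has shape (n_x, m): one column per training example (assumed layout).
    Y has shape (1, m): labels in {0, 1}.
    """
    n_x, m = X.shape
    w = np.zeros((n_x, 1))
    b = 0.0
    for _ in range(iterations):
        A = sigmoid(w.T @ X + b)      # (1, m) predictions for all examples
        dZ = A - Y                    # (1, m)
        dw = (X @ dZ.T) / m           # (n_x, 1), averaged over examples
        db = float(np.sum(dZ)) / m
        w -= alpha * dw
        b -= alpha * db
    return w, b

def cost(w, b, X, Y):
    """Average cross-entropy loss over all m examples."""
    A = sigmoid(w.T @ X + b)
    return float(-np.mean(Y * np.log(A) + (1 - Y) * np.log(1 - A)))

# Tiny made-up dataset: the label is 1 when the single feature is positive.
X = np.array([[-2.0, -1.0, 1.0, 2.0]])
Y = np.array([[0, 0, 1, 1]])

w, b = train(X, Y)
print(cost(np.zeros((1, 1)), 0.0, X, Y), cost(w, b, X, Y))
print((sigmoid(w.T @ X + b) >= 0.5).astype(int))
```

The only explicit loop left is the one over gradient descent iterations; the forward pass, the gradients, and the cost are each computed for all m examples at once.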

Steve-YJ/Data-Science-scratch (github.com)

Reference

Deep Learning Specialization, deeplearning.ai (www.coursera.org)

✅ End! -20.11.03.Tue- :)

✅ Update! -20.11.15.Sun am 7:00 - :)
